Day 2: The 4 Types of Agentic Systems (and When to Use What)

Welcome to Day 2 of Building Agentic AI Applications!

Missed previous days’ posts? Find them here

Yesterday, we looked at what makes AI agentic: it’s not just about understanding or generating content, it’s about performing actions and handling tasks end-to-end.

But as teams rush to “add agents” to their stack, here’s the catch:

Not all agents are built the same, and not all problems need highly autonomous systems.

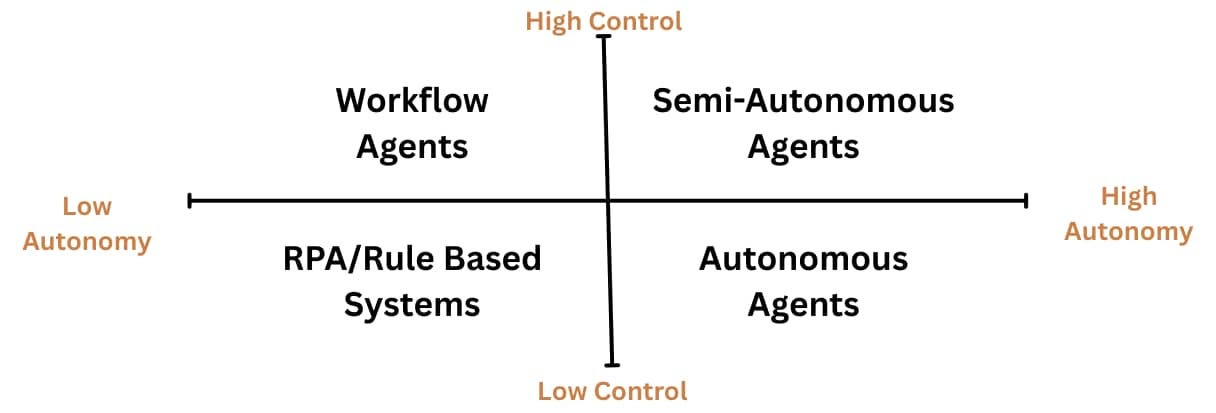

In this lesson, we’ll walk through four types of agentic systems (as discussed yesterday), using a simple but powerful lens:

How much autonomy does the agent have?

How much control does the human or system retain?

This balance impacts how the system behaves, how you evaluate it, and what infrastructure you need to build.

But first, a quick foundation.

The Tool-Augmented LLM

At the core of most modern agents is an LLM (Large Language Model) acting as the brain of the system. Throughout this course, we use the term LLM to refer broadly to generative AI models—not just text-only models.

On its own, an LLM can only generate content. To turn it into an agent, you augment it with:

Tools: APIs, functions, databases it can call

Planning: The ability to break a goal into multiple steps

Memory: So it can track past actions and outcomes

State and Control Logic: To know what’s done, what failed, and what to do next

When connected to these components, the LLM becomes more than a chatbot. It becomes a goal-driven system that can reason, take action, and adapt.
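To make the four components concrete, here is a minimal sketch of how they might fit together. Everything in it is hypothetical: the `Agent` class, the `fake_llm` stub that stands in for a real model call, and the tool names are illustration only, not a real framework’s API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical skeleton: an LLM stub wrapped with tools, planning, memory, and state."""
    llm: Callable[[str], list[str]]        # planning: goal -> ordered list of tool names
    tools: dict[str, Callable[[], str]]    # tools: name -> function the agent may call
    memory: list[tuple[str, str]] = field(default_factory=list)  # (step, outcome) history
    failed: list[str] = field(default_factory=list)              # state: steps that failed

    def run(self, goal: str) -> list[tuple[str, str]]:
        for step in self.llm(goal):        # control logic: walk the plan in order
            tool = self.tools.get(step)
            if tool is None:
                self.failed.append(step)   # unknown tool: record it and move on
                continue
            self.memory.append((step, tool()))  # remember the action and its outcome
        return self.memory


def fake_llm(goal: str) -> list[str]:
    # Stand-in for a real model call; it always returns the same plan.
    return ["search_orders", "send_email", "book_flight"]


agent = Agent(
    llm=fake_llm,
    tools={
        "search_orders": lambda: "3 orders found",
        "send_email": lambda: "email sent",
    },
)
history = agent.run("follow up on open orders")
```

The plan asks for a `book_flight` tool the agent doesn’t have, so that step lands in `agent.failed` while the other two are recorded in memory. A real system would do something smarter with failures, but the division of responsibilities is the same.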

But depending on how much you trust it to act without supervision, you end up with different types of agents.

Let’s walk through them, starting from the least autonomous.

1. Rule-Based Systems/Agents

Low Autonomy, Low Control

These systems don’t use LLMs at all. They’re built with traditional if-this-then-that logic. Every decision path is manually scripted. There’s no reasoning or learning. Rule-based agents existed long before the LLM era.

Wait, aren’t we talking about AI agents? Well, yes—but not every problem needs an AI model. Remember: start with the problem, not the AI. If you can solve it without AI, don’t overcomplicate it.

What problems do they solve?

Well-structured, repetitive tasks with fixed inputs and outputs.

Examples:

Automatically approve reimbursements under a fixed amount

Rename files in a folder based on filename patterns

Copy data from Excel sheets into form fields

Pros: Fast, auditable, predictable

Cons: Brittle to change, can’t handle ambiguity

Best used when: You know all the conditions ahead of time and there’s no need for flexibility.
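The reimbursement example above fits in a few lines of plain code, with no model involved. This is only a sketch: the 50.00 limit, the receipt rule, and the function name are assumptions made up for illustration.

```python
APPROVAL_LIMIT = 50.00  # hypothetical threshold; a real policy would set its own


def review_reimbursement(amount: float, has_receipt: bool) -> str:
    """Every decision path is scripted by hand; nothing is learned or inferred."""
    if not has_receipt:
        return "rejected"
    if amount <= APPROVAL_LIMIT:
        return "approved"
    return "needs_manager_review"
```

Every outcome can be traced back to one line, which is why these systems are easy to audit. It also shows the brittleness: a claim in a different currency or with a blurry receipt needs a new rule written by a person.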

2. Workflow Agents

Low Autonomy, High Control

This is often the first step for enterprises introducing LLMs into their workflows. Here, the LLM enhances an existing workflow but doesn’t execute actions independently. A human stays in control.

What problems do they solve?

Repetitive tasks that benefit from natural language understanding, summarization, or generation, but still need human decision-making.

Examples:

Suggesting first-draft responses in a support tool like Zendesk

Generating summaries of meeting transcripts

Translating natural language queries into structured search inputs for BI dashboards

How the LLM is used:

It reads input (text, tickets, documents), understands context, and generates useful content, but doesn’t act on it. A human still decides what to do.

Pros: Easy to deploy, low risk, quick value

Cons: Can’t execute or plan, limited end-to-end value

Best used when: You want to augment your team’s productivity without giving up oversight.
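Here is a rough sketch of the support-draft example. The model only writes text, and nothing is sent unless a person approves it. `fake_llm`, `handle_ticket`, and the approval callback are hypothetical stand-ins, not Zendesk’s or any vendor’s API.

```python
from typing import Callable


def draft_reply(ticket_text: str, llm: Callable[[str], str]) -> str:
    """Ask the model for a first draft. The model only generates text."""
    prompt = f"Draft a polite support reply to this ticket:\n{ticket_text}"
    return llm(prompt)


def handle_ticket(
    ticket_text: str,
    llm: Callable[[str], str],
    human_approves: Callable[[str], bool],
    send: Callable[[str], None],
) -> str:
    draft = draft_reply(ticket_text, llm)
    if human_approves(draft):  # the human decides, not the model
        send(draft)
        return "sent"
    return "discarded"


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    return "Thanks for reaching out. We are looking into your login issue."


outbox: list[str] = []
status = handle_ticket(
    "I can't log in",
    fake_llm,
    human_approves=lambda draft: "login" in draft,  # simulated reviewer
    send=outbox.append,
)
```

The only path to `send` runs through `human_approves`. That single gate is what keeps control high in this pattern.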

3. Semi-Autonomous Agents

Moderate to High Autonomy, Moderate Control

These are true agentic systems. They not only understand tasks but can plan multi-step actions, invoke tools, and complete goals with minimal supervision. However, they often operate with some constraints or monitoring built in.

What problems do they solve?

Multi-step workflows that are well-understood but too tedious or time-consuming for humans.

Examples:

A lead follow-up agent that drafts, personalizes, and sends emails based on CRM data, while logging results

A document automation agent that extracts details from contracts and updates internal systems

A research agent that pulls data from multiple sources, compares findings, and sends a structured report

How the LLM is used:

The LLM plans the steps, calls APIs to fetch or push data, keeps track of progress, and adapts if something goes wrong. It often includes fallback paths or checkpoints for human review.

Pros: Automates complex workflows, saves time, higher ROI

Cons: Needs infrastructure (planning, memory, tool calling), harder to test

Best used when: You want to automate well-bounded business workflows while retaining some control.
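A minimal sketch of the lead follow-up example shows the difference from a workflow agent: most steps run without asking, and only the ones marked as sensitive pause for review. The plan is hard-coded here where a real agent would get it from the LLM, and `REQUIRES_REVIEW`, the tool names, and `run_semi_autonomous` are all assumptions for illustration.

```python
from typing import Callable

REQUIRES_REVIEW = {"send_email"}  # hypothetical: steps that need a human checkpoint


def run_semi_autonomous(
    plan: list[str],
    tools: dict[str, Callable[[], str]],
    approve: Callable[[str], bool],
) -> list[tuple[str, str]]:
    log: list[tuple[str, str]] = []
    for step in plan:
        if step in REQUIRES_REVIEW and not approve(step):
            log.append((step, "skipped: not approved"))  # checkpoint said no
            continue
        try:
            log.append((step, tools[step]()))            # act without asking
        except Exception as exc:
            log.append((step, f"failed: {exc}"))
            break                                        # fallback: stop and hand over
    return log


plan = ["fetch_lead", "draft_email", "send_email", "log_result"]
tools = {
    "fetch_lead": lambda: "lead: Dana",
    "draft_email": lambda: "draft ready",
    "send_email": lambda: "email sent",
    "log_result": lambda: "logged",
}
log = run_semi_autonomous(plan, tools, approve=lambda step: False)
```

With a reviewer who rejects everything, the agent still fetches, drafts, and logs, but the email is never sent. Widening or shrinking `REQUIRES_REVIEW` is one simple way to trade autonomy against control.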

4. Autonomous Agents

High Autonomy, Low Control

These agents are fully goal-driven. You give them a broad objective, and they figure out what to do, how to do it, when to retry, and when to escalate. They act independently, often across systems and over time.

What problems do they solve?

High-effort, async, or long-running tasks that span multiple systems or steps and don’t need constant human input.

Examples:

A competitive research agent that pulls data over days, summarizes updates, and generates weekly insight briefs

An ops automation agent that detects issues in pipelines, diagnoses root causes, and files tickets with suggested fixes

A testing agent that autonomously runs product flows, logs results, and suggests new edge-case scenarios

How the LLM is used:

The LLM is the planner, decision-maker, tool-user, memory tracker, and communicator. It manages retries, evaluates whether goals are met, and decides when to stop or adapt.

Pros: Extremely scalable, can handle complex tasks

Cons: High risk if not monitored, hard to evaluate or trace, infra-heavy

Best used when: The task is high-leverage, async, and doesn’t require human feedback at every step.
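The retry, evaluate, and escalate behavior described above can be sketched as a small loop. This is a deliberately simplified illustration: `run_autonomous`, the flaky check, and the three-attempt budget are hypothetical, and in a real agent the LLM would drive both the action and the goal evaluation.

```python
from typing import Callable


def run_autonomous(
    act: Callable[[int], str],
    goal_met: Callable[[str], bool],
    max_attempts: int = 3,
) -> dict:
    """Act, check the goal, retry, and escalate once the attempt budget runs out."""
    for attempt in range(1, max_attempts + 1):
        result = act(attempt)
        if goal_met(result):  # the agent itself decides when it is done
            return {"status": "done", "attempts": attempt, "result": result}
    return {"status": "escalated", "attempts": max_attempts, "result": None}


def flaky_pipeline_check(attempt: int) -> str:
    # Simulated diagnosis tool: times out once, then finds the cause.
    return "timeout" if attempt == 1 else "root cause: expired credentials"


outcome = run_autonomous(
    flaky_pipeline_check,
    goal_met=lambda result: result.startswith("root cause"),
)
```

No human appears anywhere in the loop until the escalation branch. That is where the scalability comes from, and also why monitoring and traceability matter so much for this type.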

So how do you decide what type of agent to build?

Not by picking your favorite architecture.

You start with the problem.

Is it repetitive and structured?

Does it involve language understanding or generation?

Is it a multi-step task that needs decision-making?

Do you trust an AI system to execute the entire task, or do you want a human in the loop?
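One way to read these four questions is as a small decision function. The sketch below is an interpretation built from the “Best used when” lines in each section, not an official mapping. The function name, parameter names, and the order of the checks are assumptions.

```python
def suggest_agent_type(
    structured_and_repetitive: bool,
    needs_language: bool,
    multi_step_decisions: bool,
    trust_full_execution: bool,
) -> str:
    """Rough heuristic: start from the problem and add autonomy only when needed."""
    if structured_and_repetitive and not needs_language:
        return "rule-based system"      # all conditions known ahead of time, no AI needed
    if not multi_step_decisions:
        return "workflow agent"         # the LLM assists, a human decides
    if trust_full_execution:
        return "autonomous agent"       # the agent runs the whole task
    return "semi-autonomous agent"      # multi-step, with human checkpoints
```

For example, `suggest_agent_type(False, True, True, False)` returns `"semi-autonomous agent"`. Real problems rarely reduce to four booleans, so treat this as a starting point for discussion rather than a rule.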

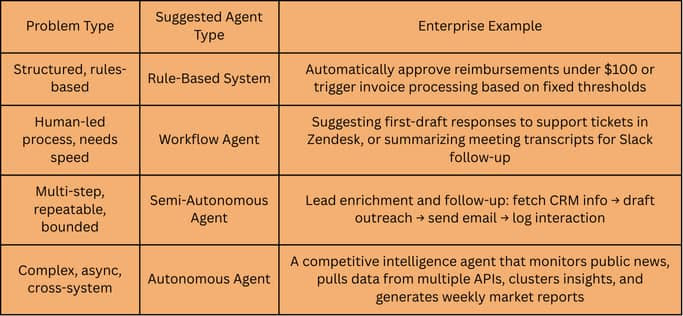

Here’s a simple mapping to guide you:

Before we wrap up, two important points:

These approaches aren’t mutually exclusive. A single system can use a mix of them: some parts might require high control, while others can benefit from high autonomy. Think of them as options you can apply to different parts of a problem.

Each problem type can be tackled by either a single agent or a group of collaborating agents.

We’ll dive deeper into single-agent vs. multi-agent design later in the course. But for now, just remember:

Don’t start with “How do I build a multi-agent system?”

Start with “What’s the problem I’m solving, and what kind of autonomy does it require?”

Let the problem shape the agentic design, not the other way around.

Tomorrow, at the same time, we’ll dive deeper into the role of tools in agentic systems. They’re the reason AI has become far more usable, and we’ll break down exactly how and why.

-Aish
